The largest model for writing code: https://poe.com/Code-Llama-70B-FW (registration required).

Two videos about the end of programming in the near future:

https://www.youtube.com/watch?v=JhCl-GeT4jw

and

https://youtu.be/Z1Ph9sISqMY

Want to practice using API keys? Mistral MoE on https://console.groq.com/playground is still free and does not require a phone number.

The video discusses using LangGraph for code generation. The main idea is to generate a set of solutions, rank them, and improve them based on test results, without human intervention.
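To make the generate, rank, and improve idea concrete, here is a minimal sketch in plain Python. It does not use the LangGraph API; `fake_generate` and `fake_improve` are hypothetical stand-ins for LLM calls, and the `add` task with its tests is invented purely for illustration.

```python
from typing import Callable, List, Tuple

Test = Tuple[tuple, object]  # (positional arguments, expected result)


def count_passed(source: str, func_name: str, tests: List[Test]) -> int:
    """Run candidate source code and count how many tests it passes.

    exec() on generated code is unsafe outside a sandbox; it is used here for brevity.
    """
    namespace: dict = {}
    try:
        exec(source, namespace)
        func = namespace[func_name]
    except Exception:
        return 0  # code that does not even load passes nothing
    passed = 0
    for args, expected in tests:
        try:
            if func(*args) == expected:
                passed += 1
        except Exception:
            pass  # a crashing test counts as a failure
    return passed


def generate_rank_improve(
    generate: Callable[[int], str],
    improve: Callable[[str], str],
    func_name: str,
    tests: List[Test],
    n_candidates: int = 3,
    max_rounds: int = 3,
) -> str:
    """Generate several candidates, keep the best by test score, then repair it."""
    candidates = [generate(i) for i in range(n_candidates)]
    best = max(candidates, key=lambda src: count_passed(src, func_name, tests))
    for _ in range(max_rounds):
        if count_passed(best, func_name, tests) == len(tests):
            break  # all tests pass, no human needed
        best = improve(best)
    return best


# Hypothetical stand-ins for model calls: canned candidates and a trivial "fix".
CANNED = [
    "def add(a, b):\n    return a - b\n",  # wrong operator
    "def add(a, b):\n    return a * b\n",  # wrong operator, passes more tests
    "def add(a, b)\n    return a + b\n",   # syntax error
]


def fake_generate(i: int) -> str:
    return CANNED[i]


def fake_improve(source: str) -> str:
    return source.replace("*", "+")


TESTS: List[Test] = [((1, 2), 3), ((0, 0), 0), ((2, 2), 4)]
best = generate_rank_improve(fake_generate, fake_improve, "add", TESTS)
print(best)
```

In a real system the two stubs would be model calls, and the test results would be fed back into the improvement prompt.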

Links to the main open models for code writing: https://huggingface.co/collections/loubnabnl/code-generation-65df5c21dea2916244966bee

- StarCoder2-15B is now available as well

Comparison of Codeium and GitHub Copilot

I don't like how vaguely the Codeium developers describe what they use, given that it is a tool for programmers, who would understand the details. Most likely, since they can afford to offer it for free, autocompletion uses a small transformer of about 2B parameters, and chat in the free version uses GPT-3.5:

Codeium Autocomplete uses its own models trained from scratch in-house. There is no dependency on open models, OpenAI, or other third-party APIs. Codeium Search uses a small, local model. No third-party APIs are used for search. Codeium Chat currently uses a combination of our own proprietary chat model and third-party OpenAI APIs.

[GitLab Duo](https://about.gitlab.com/gitlab-duo/) is a set of tools to simplify software development workflows (not only code writing) using GitLab Chat.

Its features include code suggestions, automatic code checks, performance prediction, code change summaries, vulnerability explanations and help in fixing them, test generation, and much more.

I want to share my thoughts on LLM leaderboards. The thing is, accurately testing and evaluating models is quite difficult. Performance can vary depending on the type of task and context.

I believe that there is no point in delving too deeply into a detailed comparison of the positions of models in the leaderboards. Instead, it is better to divide them into several groups: leaders, middle, and laggards. This will give a more realistic idea of their capabilities and help avoid over-fixation on minor differences in scores.
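As a rough illustration of this grouping, the sketch below splits the range between the lowest and highest score into three equal bands. The model names and scores are made up, and equal-width bands are only one possible way to draw the boundaries.

```python
from typing import Dict, List


def group_into_tiers(scores: Dict[str, float]) -> Dict[str, List[str]]:
    """Split models into leaders, middle, and laggards by equal-width score bands."""
    tiers: Dict[str, List[str]] = {"leaders": [], "middle": [], "laggards": []}
    if not scores:
        return tiers
    low, high = min(scores.values()), max(scores.values())
    band = (high - low) / 3
    # Walk from the best score to the worst so each tier stays ordered.
    for name, score in sorted(scores.items(), key=lambda item: item[1], reverse=True):
        if band == 0 or score >= high - band:
            tiers["leaders"].append(name)
        elif score < low + band:
            tiers["laggards"].append(name)
        else:
            tiers["middle"].append(name)
    return tiers


# Hypothetical scores, not taken from any real leaderboard.
example_scores = {
    "model-a": 80.0,
    "model-b": 78.5,
    "model-c": 60.0,
    "model-d": 41.0,
    "model-e": 39.0,
}
tiers = group_into_tiers(example_scores)
print(tiers)
```

Here `model-a` and `model-b` land in the same tier even though their scores differ by 1.5 points, which is exactly the kind of minor difference the grouping is meant to ignore.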

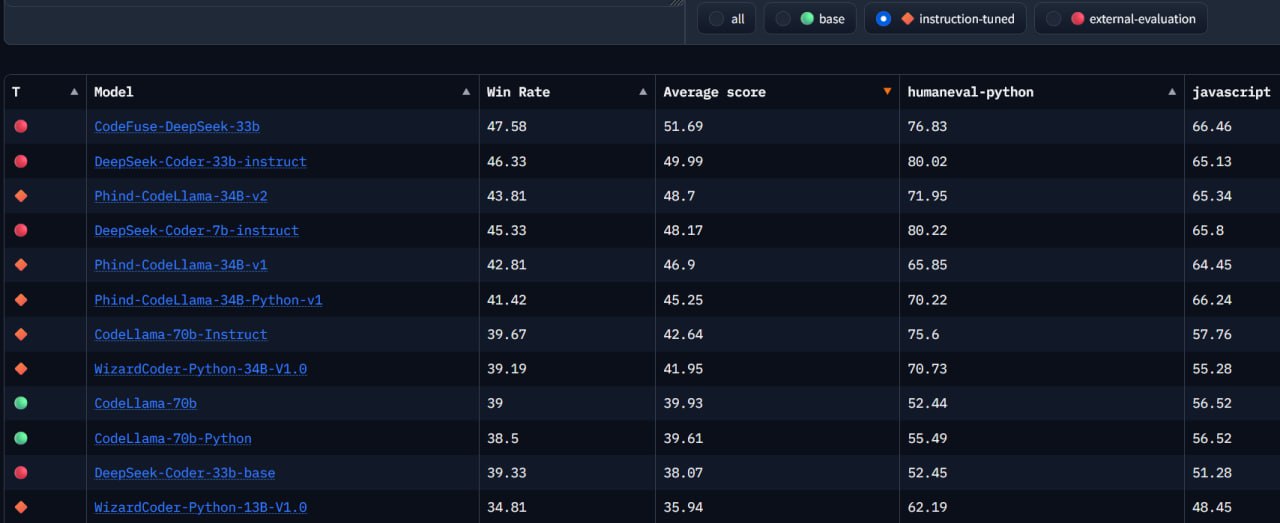

🤗 The bigcode-models-leaderboard lists only open models. In the screenshot I filtered for instruct models, which you can interact with as in a chat by giving instructions.

Overall, DeepSeek (33B) and Phind-CodeLlama (34B) showed the best performance. The table does not yet include Phind-CodeLlama 70B, and it is not yet known whether the developers will make it open source.

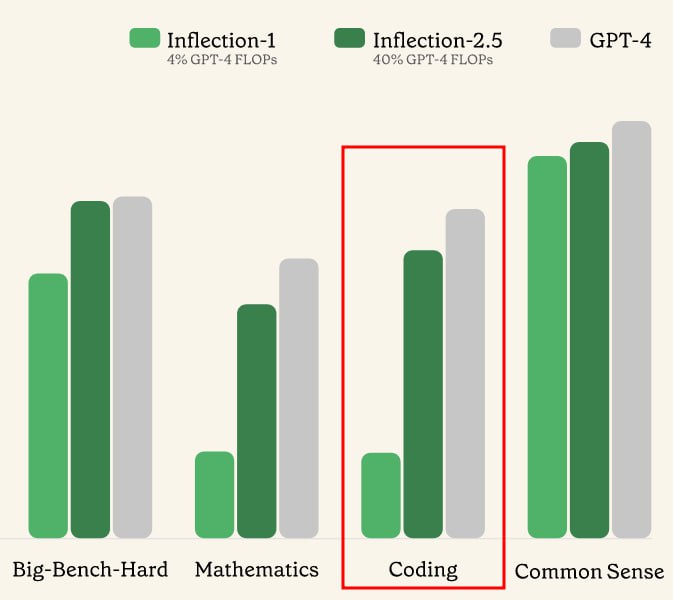

Pi.AI updated their model to version 2.5 on March 7 ("we add IQ to exceptional EQ"). It generated a working HTML calculator for me, so they are telling the truth.

From the very beginning, Pi's distinguishing feature has been imitating a conversation with short answers. It is free.

_ChatGPT added voice conversation in its mobile clients, after which the model's output became somewhat more conversational; for Pi, this was the idea of the system from the start._ ChatGPT can now read messages aloud in the browser only with a Plus subscription, whereas here it is free.

In the conversation with Greg Brockman (from OpenAI), we learn about Codex, at that time a new version of a large language model focused on code generation. The conversation took place on August 12, 2021, at an early stage in the use of such models for programming, so today it has historical value.

Codex is a descendant of GPT-3, with numerous improvements for better code understanding and generation. The model is trained on text and open-source code from the Internet and can generate executable code from natural language prompts.

Greg emphasizes the importance of high-quality input data and values during model training to prevent bias and unwanted behavior. He also sees the potential of Codex in programming education, since the model can provide explanations and guidance in the form of code. At the same time, there are copyright and access issues that need to be addressed when deploying such systems.

I don't understand why it's free.

Pieces Desktop App is an application for developers that helps improve the workflow using artificial intelligence. It offers functions for storing, searching, and creating code snippets (Pieces), as well as generating unique code.

🤖 Code snippet management: save code snippets from various sources, edit them, and share links, including code recognition from screenshots.

🚀 Copilot: run AI chats with both local models (CPU or GPU) and cloud models, generating code snippets based on context and saved snippets.

🔍 Search: quick access to saved snippets and search in global repositories.

🛠 Additional features: view the activity of working with code snippets, updates.

https://pieces.app/

first-of-its-kind platform focused around file fragments

🎉 POE finally has the DeepSeek-Coder-33B Instruct model (as of today, one of the best for code generation), and it is free: 0 points/message.

Unfortunately, a small token limit is set for the response, so sometimes you have to write "continue" in the chat.

The same provider (Together.ai) also offers the large Code Llama 70B Instruct for 30 points/message. Previously it was available only from the provider Fireworks for 50 points/message.

POE provides 3000 points for free every day.
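A quick back-of-the-envelope calculation shows what the free daily allowance means in practice. The sketch uses only the figures quoted above; the function name and model labels are illustrative.

```python
from typing import Optional

DAILY_POINTS = 3000  # free points per day, as stated above


def messages_per_day(cost_per_message: int, daily_points: int = DAILY_POINTS) -> Optional[int]:
    """Return how many messages the daily points cover, or None if messages cost nothing."""
    if cost_per_message == 0:
        return None  # not limited by points at all
    return daily_points // cost_per_message


costs = {
    "DeepSeek-Coder-33B Instruct": 0,
    "Code Llama 70B Instruct (Together.ai)": 30,
    "Code Llama 70B Instruct (Fireworks)": 50,
}
for model, cost in costs.items():
    limit = messages_per_day(cost)
    print(model, "->", "not limited by points" if limit is None else f"{limit} messages/day")
```

So the free allowance covers 100 messages a day at 30 points/message and 60 messages a day at 50 points/message.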

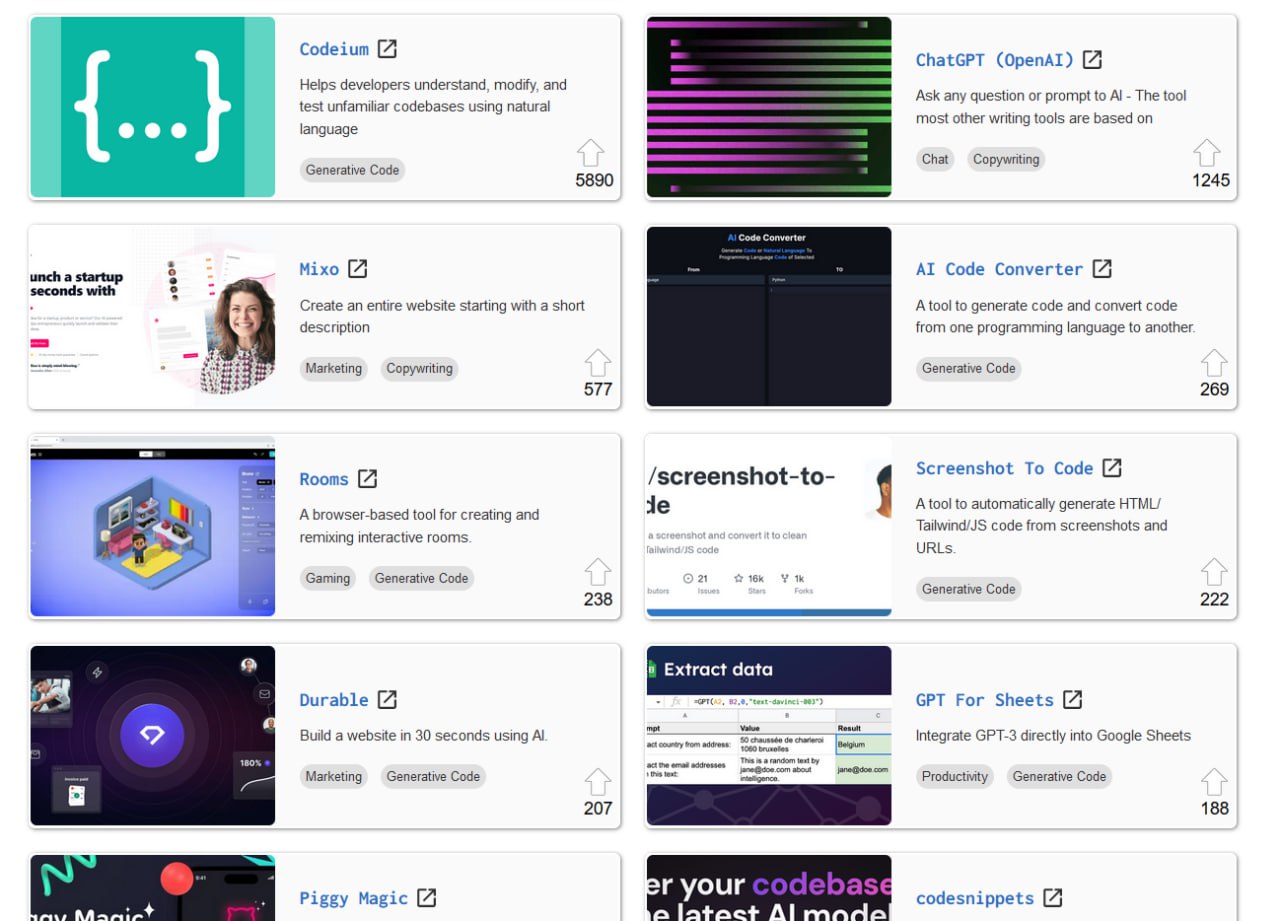

There are several catalog sites where people add and vote for tools that use artificial intelligence. We are interested in the "code generation" section.

On futuretools.io, Codeium (5890) and ChatGPT (1245) currently hold the top places by user votes.

I want to make a separate video about Codeium.

Devin, "the first AI developer", is the trend of recent days: an example of how to raise a lot of money from investors by wrapping an ordinary GPT-4 multi-agent setup in marketing hype and cherry-picked graphs and demos.

https://www.youtube.com/watch?v=AgyJv2Qelwk

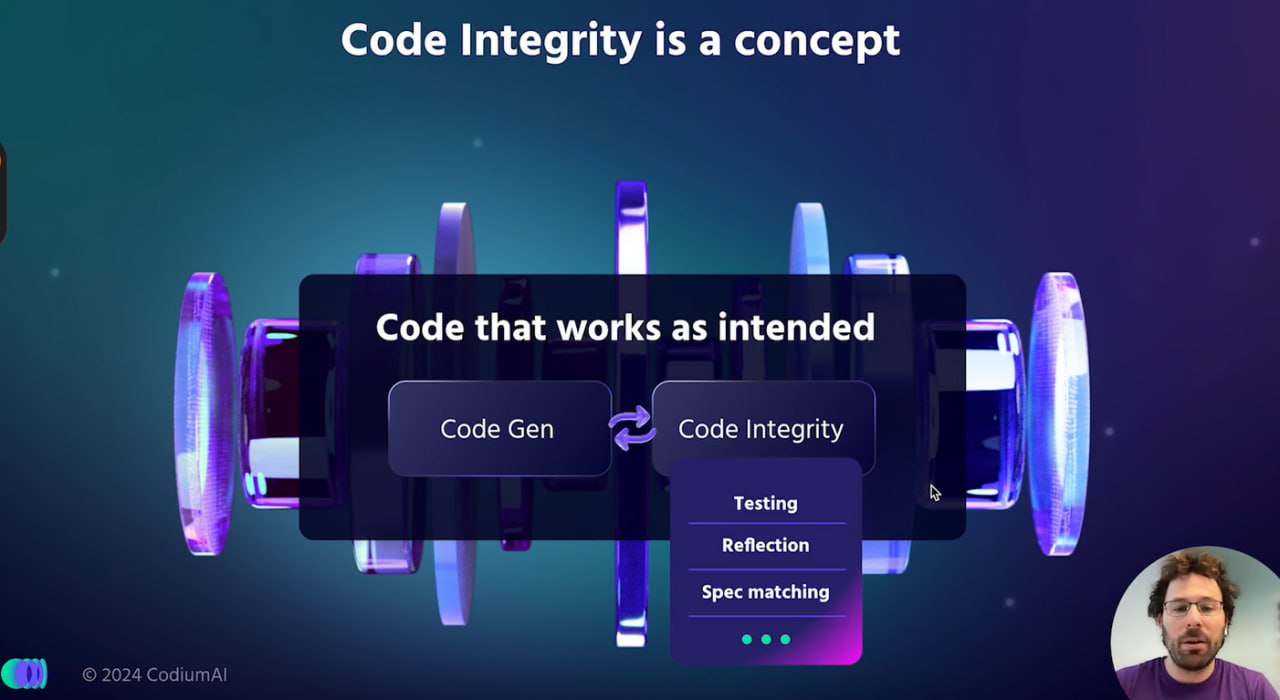

AlphaCodium from CodiumAI

CodiumAI, unlike other AI systems for working with code, chose and occupied a narrow niche: improving code quality (test generation, security and performance analysis).

Their next step is the AlphaCodium system, which can automatically check the quality of the generated code. In this respect it resembles how GANs work. It is open source, runs with an OpenAI API key, and solves problems formulated as JSON in the CodeContests format.

Blog post: From "Prompt Engineering" to "Flow Engineering"

The WebGPT creator's response to the fact that his cool code generation system goes unnoticed because it is part of the ChatGPT Plus subscription: he demonstrates it, and you can pause the video to see how the web requests are made.

In the end, he will make his own platform, which will look just like a chat.

The author of the VS Code extension Double says that he was motivated by two years of observing how the GitHub Copilot team did not fix user interface flaws:

- incorrect closing of brackets,

- poor comment autocompletion,

- lack of auto-import of libraries,

- incomplete operation of the multi-cursor mode,

as well as all the other problems of the outdated GPT-3.5 model (GPT-4 is used only where the system decides to).

The latest version of Double integrates the Claude 3 Opus model from Anthropic, which, according to some benchmarks, surpasses GPT-4. In addition to Opus, GPT-4 Turbo is also present. Double.bot was accepted into the Y Combinator accelerator. 50 requests to any of these models per month are available for free. A full subscription costs $20 (and GitHub Copilot now costs $10 without slash commands, $19 with them).

The extension is not on open-vsx.org.

To register, you need to enter the code from the SMS - it never came to my Ukrainian number.

A walkthrough of using Tabby as a local replacement for GitHub Copilot, for code autocompletion and function generation. The system works without an Internet connection, and the code does not leave your computer.

The StarCoder-3B model is used in VS Code. The walkthrough covers installing and configuring Tabby via Docker on a machine with an NVIDIA GeForce RTX 3070 GPU.

🛠 Installation and configuration of Tabby via Docker

⚙️ Model selection (StarCoder, CodeLlama, DeepseekCoder)

💡 Running Tabby on a machine with a GPU or a CPU, and what needs to be installed for CUDA to work

The author believes that Tabby is more convenient to use than the ollama server.

I made a video review of Codeium. Compared with Cursor and Phind, the system is not interesting, and it is not clear why it has so many likes.

VS Code plugins for local code generation: Continue or Twinny

Options:

🤖 Continue: everything is only through chat, no code autocompletion

🦙 Llama Coder: code autocompletion, no chat interface

🔍 Cody from Sourcegraph: pricing model is unclear

👯♂️ Twinny: a new project that 🤝 combines the features of Llama Coder and Continue - chat and code autocompletion.

_To keep autocompletion from lagging, you of course need a smaller "base" model (1-3B) and a more powerful computer._ For the chat to work, you also need to run an "instruct" model.

And I also joined this with my mouse 😉

Since the launch of the Chatbot Arena project, the GPT-4 model has always been in first place. (news)

Interestingly, while the GPT-3.5 model was impressive at first, it now looks very weak compared with newer models and gives poor results. We expect some changes from OpenAI in the near future.

Stability AI released the instruct version of its code generation model Stable Code, and ollama added it to its repository. The model has 3B parameters, and the generation quality is at the expected level.

In 13 minutes, Prof. Andrew Ng (the author of many AI courses) uses slides to present advanced methods for applying large language models to improve the software development process.

The main ideas of the speech:

🧠 Agent approaches in AI are becoming increasingly popular and effective. This is an iterative process where AI can learn, review, and improve its result.

✍️ Reflection: An AI agent can evaluate its own code/result and refine it. This increases productivity.

🤖 Multi-agent systems: using two or more agents, such as an expert coder and an expert reviewer, significantly improves the quality.

🔧 Using tools: connecting AI to various tools (web search, data analysis, etc.) expands its capabilities.

👷♂️ Planning: AI agents can autonomously plan actions and change the plan in case of failures, which is impressive.

🔀 Combination of all these approaches opens up new possibilities and improves AI results compared to simple code generation (there is a slide with a graph, but with a 40% axis).

https://www.youtube.com/watch?v=sal78ACtGTc

That is, agent-based artificial intelligence technologies may be the next step in the field of software development.
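The reflection and coder/reviewer patterns from the talk can be sketched as a small loop. This is only an illustration under stated assumptions: `stub_coder` and `stub_reviewer` are hypothetical stand-ins for two LLM agents, and the `is_even` task is invented for the example.

```python
from typing import Callable, Optional


def reflect(
    task: str,
    coder: Callable[[str, Optional[str]], str],
    reviewer: Callable[[str, str], Optional[str]],
    max_rounds: int = 3,
) -> str:
    """Alternate between a coder and a reviewer until the reviewer has no objections."""
    draft = coder(task, None)  # first attempt, no feedback yet
    for _ in range(max_rounds):
        feedback = reviewer(task, draft)
        if feedback is None:
            break  # reviewer is satisfied
        draft = coder(task, feedback)  # revise using the critique
    return draft


# Hypothetical stand-ins for the two agents.
def stub_coder(task: str, feedback: Optional[str]) -> str:
    if feedback is None:
        return "def is_even(n):\n    return n % 2 == 1\n"  # deliberately buggy first draft
    return "def is_even(n):\n    return n % 2 == 0\n"


def stub_reviewer(task: str, draft: str) -> Optional[str]:
    namespace: dict = {}
    exec(draft, namespace)  # unsafe for untrusted code; acceptable in a toy example
    is_even = namespace["is_even"]
    if is_even(2) is not True or is_even(3) is not False:
        return "is_even gives wrong answers for 2 or 3"
    return None


final = reflect("write is_even(n)", stub_coder, stub_reviewer)
print(final)
```

With real models, the reviewer's textual feedback would be inserted into the coder's next prompt, and `max_rounds` keeps the loop from running indefinitely when the agents never agree.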