The dbrx-instruct model (GitHub) has appeared in the chat section of Chatbot Arena and in the Perplexity playground. I ran a series of code-generation tests, and the results are indeed solid. It is also faster than CodeLLaMA-70B.

The developer of the VSCode plugin Double has added DBRX Instruct alongside GPT-4 Turbo and Claude 3 (Opus), although it is not very clear why, and has also opened a GPT-5 waitlist.

Databricks, a company known for its data processing and analytics solutions, has released DBRX, one of the most powerful and efficient open LLMs. In the charts published in the announcement post, DBRX outperforms other open models in mathematics and programming.

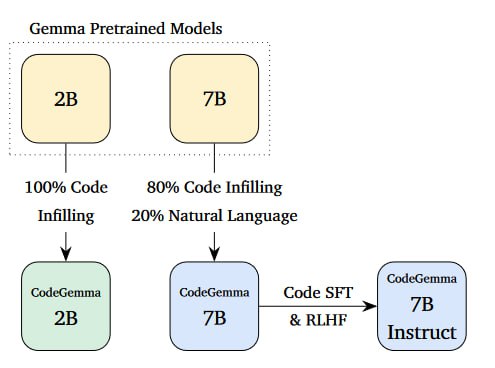

DBRX is a mixture-of-experts (MoE) 16x12B model (132 billion total parameters, of which 36 billion are active for each token) that outperforms the open Grok-1 and the closed GPT-3.5 Turbo on many tasks (but not Claude 3 Haiku). The context window is 32k tokens, and the tokenizer is like GPT-4's. Knowledge cutoff: December 2023.
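To make the "total vs. active" parameter numbers more concrete, here is a small back-of-the-envelope sketch. The 132B and 36B figures come from the text above; the routing scheme (4 of 16 experts chosen per token) is an assumption that is not stated here, so treat the combination count as illustrative only.

```python
from math import comb

# Figures quoted in the text above (in billions of parameters).
TOTAL_PARAMS_B = 132
ACTIVE_PARAMS_B = 36

# Share of the model's weights that take part in processing one token.
active_fraction = ACTIVE_PARAMS_B / TOTAL_PARAMS_B

# ASSUMPTION (not from the text): the router picks 4 of the 16 experts per token.
NUM_EXPERTS = 16
EXPERTS_PER_TOKEN = 4
expert_combinations = comb(NUM_EXPERTS, EXPERTS_PER_TOKEN)

print(f"Active share of parameters per token: {active_fraction:.1%}")
print(f"Possible expert subsets per token: {expert_combinations}")
```

In other words, only a bit more than a quarter of the weights do work for any given token, which is roughly why an MoE model of this size can generate faster than a dense 70B model.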

The developers say that, according to their tests, it outperforms CodeLLaMA-70B. DBRX is large enough that not everyone can run it, but it is not as huge as Grok-1, which practically no one can deploy at home right now. Meta plans to release Llama 3 sometime in July.
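To put DBRX's size in perspective, here is a rough estimate of how much memory the weights alone would need at different precisions. The 132B parameter count is from the post; the bytes-per-parameter values are generic assumptions about common storage formats, and the helper `weight_memory_gb` is a hypothetical name used only for this sketch. Activations, the KV cache, and runtime overhead are ignored, so real requirements would be higher.

```python
def weight_memory_gb(params_billion: float, bytes_per_param: float) -> float:
    """Approximate weight storage in GB (1 GB = 1e9 bytes).

    params_billion * 1e9 parameters * bytes_per_param / 1e9 bytes per GB,
    so the 1e9 factors cancel.
    """
    return params_billion * bytes_per_param


DBRX_TOTAL_PARAMS_B = 132  # total parameters, from the text

# ASSUMPTION: typical storage sizes per parameter for each precision.
PRECISIONS = {"16-bit": 2.0, "8-bit": 1.0, "4-bit": 0.5}

for label, bytes_per_param in PRECISIONS.items():
    gb = weight_memory_gb(DBRX_TOTAL_PARAMS_B, bytes_per_param)
    print(f"{label}: ~{gb:.0f} GB of weights")
```

Note that all 132B parameters have to be kept in memory even though only 36B are active per token, since the set of experts used changes from token to token.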

The chat is also available at https://huggingface.co/spaces/databricks/dbrx-instruct

(5-shot max)