Autocoder Updates

Cline v3.6.10

Starting with v3.6, they've added Cline API as a provider option, which lets newbies sign up and start using Cline for free (I got a $0.50 gift myself) without their own API keys.

They've launched a separate site, https://cline.bot/, where you can create an account (Google or GitHub only) and top it up. Credits bought with a credit card come with a 5% + $0.35 + tax surcharge.
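To get a feel for what that surcharge does to a top-up, here's a rough Python sketch. The 5% and $0.35 come from the numbers above; everything else is my assumption: that the 5% is taken from the credit amount, that tax is charged on credits plus fee, and the `card_total` name and `tax_rate` parameter are made up for illustration. Tax varies by location, so it defaults to zero.

```python
from decimal import Decimal, ROUND_HALF_UP

PERCENT_FEE = Decimal("0.05")  # the 5% part of the surcharge
FIXED_FEE = Decimal("0.35")    # the flat $0.35 part of the surcharge


def card_total(credits, tax_rate="0"):
    """Estimate the card charge for buying `credits` dollars of credits.

    Assumption: tax is applied to credits + fee. The real billing
    rules may differ; treat the result as a ballpark figure.
    """
    credits = Decimal(credits)
    fee = credits * PERCENT_FEE + FIXED_FEE
    tax = (credits + fee) * Decimal(tax_rate)
    total = credits + fee + tax
    # Round to whole cents, halves rounding up.
    return total.quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)


print(card_total("20"))          # $20 of credits, no tax
print(card_total("20", "0.10"))  # same purchase with a hypothetical 10% tax
```

Amounts go in as strings so `Decimal` doesn't pick up float rounding noise. The flat $0.35 hurts most on small top-ups, so fewer, larger purchases lose less to fees.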

The site's got a blog where they share user stories. Also, all MCP plugins are now showcased at https://cline.bot/mcp-marketplace.

They've added a setting to turn off model switching between Plan and Act modes (switching is off by default for new users).

They've dropped a Cline Memory Bank prompt example into the docs.

For those keen to help polish the product, they've added a telemetry opt-in, and it stays off by default.

Roo Code v3.8.4

Nice to see some competition in the space.

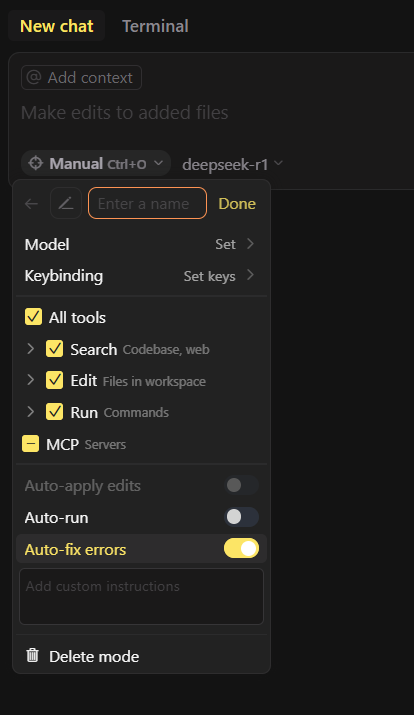

Roo's taking a different route: no MCP marketplace here, but they've beefed up the functionality for creating different modes with custom system prompts. They've also added .rooignore file support. Checkpoints, multi-window mode, and communication between subtasks and the main task are more polished.

They've tacked on "Human Relay" as a provider, alongside the nifty VS Code Language Model API mode, which lets you fire up models provided by other VS Code extensions (including, but not limited to, GitHub Copilot).

So, the plugin spits out a prompt, and we, the humans, do the relay: copy-paste it into the model's web chat, then paste the response back into the plugin window. Right now, it's the only way to use Grok 3. Sure, it'd be slick if some OS-level agent handled this, but hey, it is what it is.
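The relay idea is simple enough to sketch in a few lines of Python. This is not Roo Code's implementation, just an illustration of the flow described above; the `human_relay` function, its parameters, and the `END` marker are all hypothetical names I picked.

```python
def human_relay(prompt, show=print, read_line=input, end_marker="END"):
    """Hand a prompt to a human and return the response they paste back.

    `show` and `read_line` are injectable so the flow can be tested
    without a real terminal. Input stops at a line equal to `end_marker`.
    """
    show("--- copy this prompt into the web chat ---")
    show(prompt)
    show(f"--- paste the response, then type {end_marker} on its own line ---")

    lines = []
    while True:
        line = read_line()
        if line.strip() == end_marker:
            break
        lines.append(line)
    return "\n".join(lines)


if __name__ == "__main__":
    answer = human_relay("Summarize the changes in this diff: ...")
    print("Model said:", answer)
```

The end marker is there because pasted responses usually span several lines, and a single `input()` call would only catch the first one.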

For those wanting to help with product improvement, they've added a telemetry opt-in that asks on the first run whether you're in.

#cline #roo #prompts #mcp